Research Projects

From home automation that reads our wishes from our eyes, to smart devices in everyday working life, to systems that coordinate the deployment of emergency services or organize social mobility: the computer-controlled devices around us are being integrated ever more inconspicuously into our lives and, at the same time, are becoming increasingly intelligent thanks to the interplay of sensors and ever-improving analysis capabilities. They can make our everyday lives easier, but one key challenge is becoming increasingly clear: it is often not intuitive how these devices can be controlled.

This is where the priority program “Scalable Interaction Paradigms for Pervasive Computing Environments” comes in. “Being at home should not feel like[…] being inside a computer”: this sentence from the official application text makes clear which direction we should not take.

But the focus goes far beyond the home: this natural way of interacting should not only make sense for individuals but also feel coherent for different people interacting with the various devices distributed around the room.

To this end, a consortium of researchers from all over Germany and from many different disciplines is examining interaction with pervasive computing environments from different perspectives. Experts from the fields of computer science, human-machine interaction, psychology, visualization, and media studies have jointly developed three reference scenarios, i.e. application examples for intelligent computer systems, within which they address their research questions:

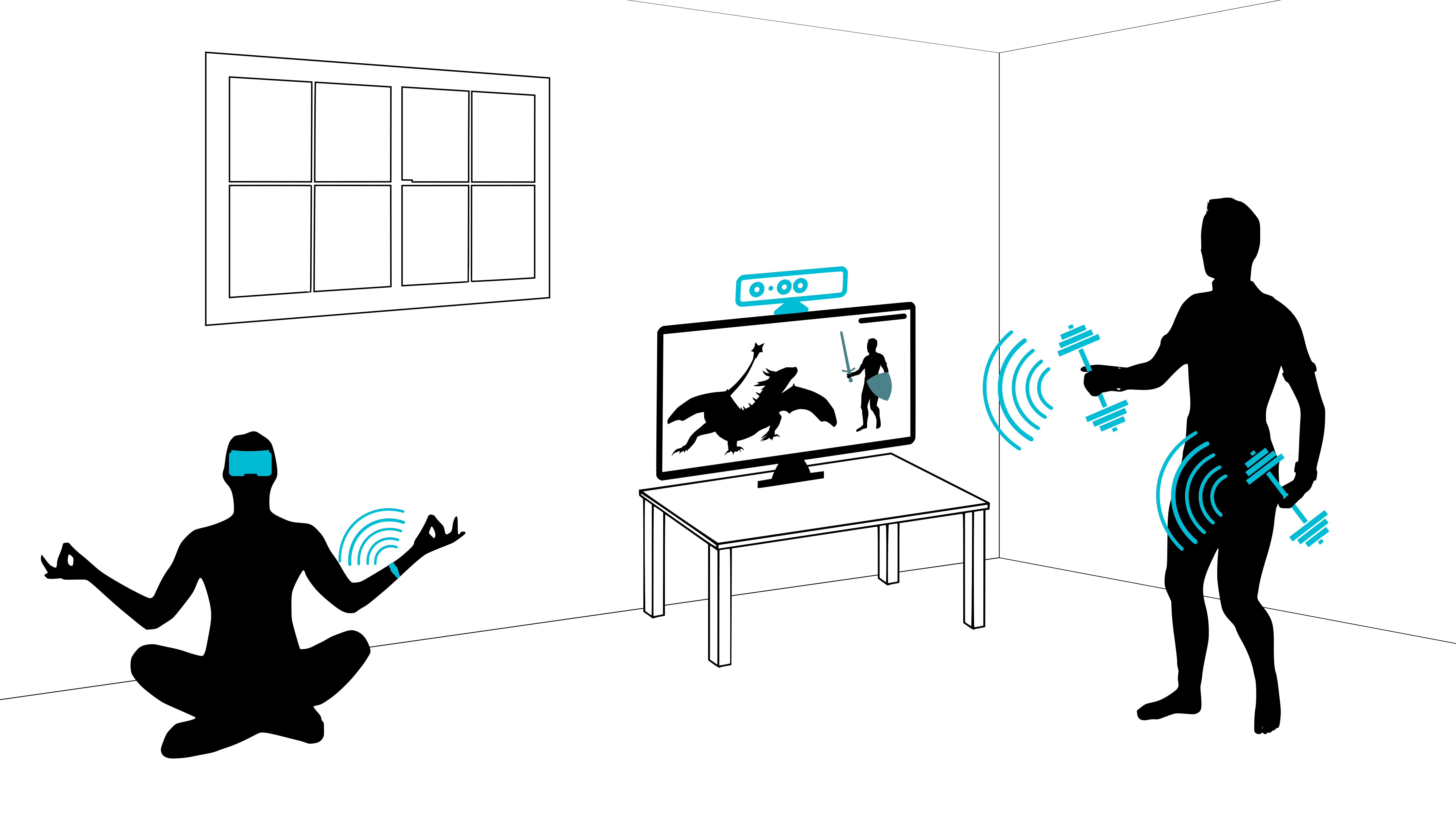

- Personal smart spaces

Here, the scientists are looking at private spaces, i.e. places that people are familiar with and share with friends or family, but rarely with strangers. Various smart devices interact with each other and with people: entertainment electronics belonging to residents and their guests, or devices that are connected to a “smart home” control system to make life in one's own home more efficient and enjoyable.

- Public smart spaces

A public smart space could be, for example, a residents’ registration office or a smart system in a retail store. The space is unfamiliar to the people who use its functionality, and they share it with strangers. Various interactive devices are used here, such as displays, cameras, and sensors.

- Intelligent control rooms

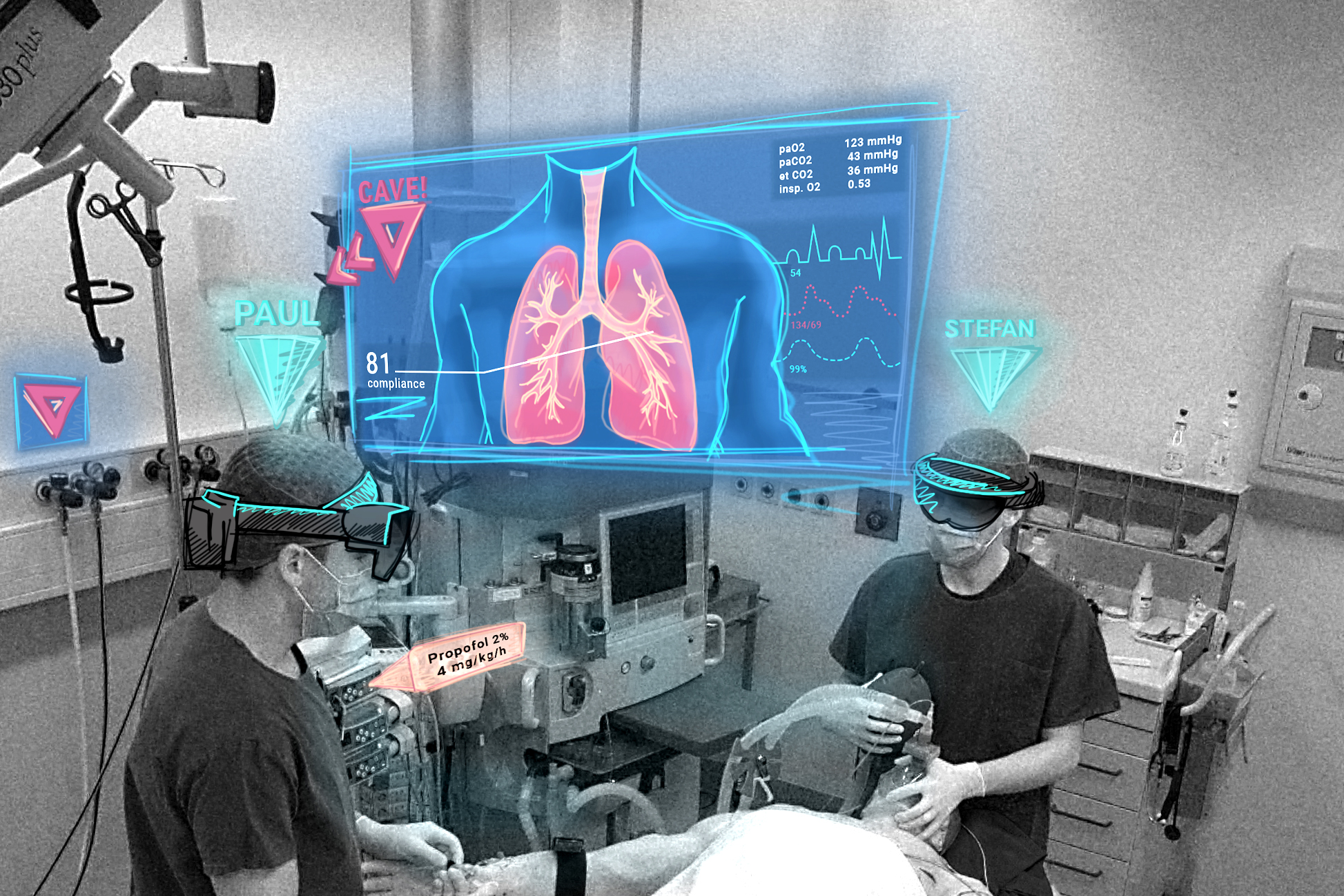

Control rooms are highly specialized spaces such as the command bridge of a ship or a rescue control center. The devices here, which interact with each other and with the people present, are used to organize and carry out professional and potentially safety-critical tasks. Unlike in the other reference scenarios, these rooms have clear tasks, routines, and objectives, and they form a controlled environment in which it is defined which people fulfill which roles.

All projects are listed below in alphabetical order. More information about each project, its related publications, and its researchers can be found on the respective project’s page.

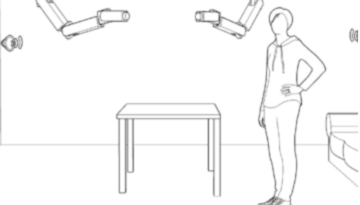

Beyond safety and efficiency in acute care: The experience of an embodied staff-environment interaction

Gestural interaction paradigms for smart spaces (GrIPSs)

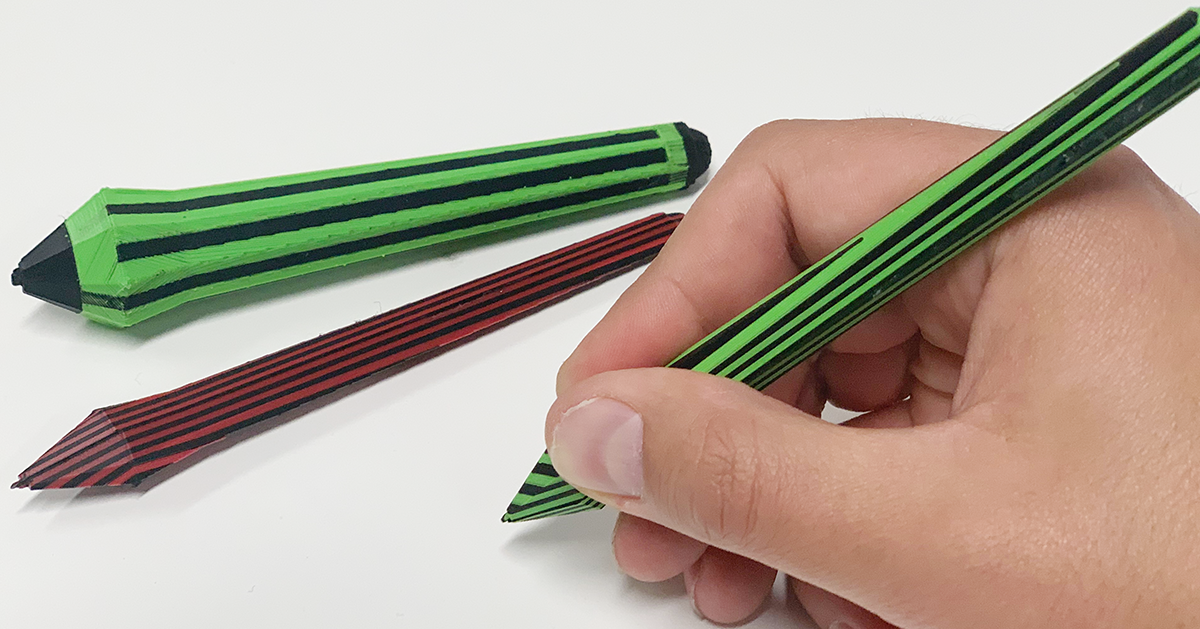

RIME – Rich Interactive Materials for Everyday Objects in the Home

User Interaction Concepts based on Prehensile Hand Behavior